Generative AI Bias Threatens Core Democratic Principles

Generative AI is making headlines and shaping our future at an unprecedented pace. As systems like ChatGPT become indispensable in sectors such as journalism, education, and policy-making, the biases built into these tools become increasingly alarming. Recent research by a team from the University of East Anglia (UEA) and Brazilian institutions, notably the Getulio Vargas Foundation and Insper, has shed light on biases embedded in generative AI platforms. The findings reveal a significant lean towards left-wing political values, raising critical questions about the fairness, accountability, and societal implications of such technologies.

This study, entitled “Assessing Political Bias and Value Misalignment in Generative Artificial Intelligence,” indicates that generative AI is not a neutral observer in our democratic ecosystems. Rather, it reflects the biases of its creators or of the data it was trained on, which suggests that these tools can distort public discourse. An analysis of ChatGPT’s output showed a tendency to avoid engaging with mainstream conservative viewpoints while readily producing content aligned with left-leaning ideologies. This selective engagement raises questions about how generative AI is shaping public conversations and whether it may be widening existing societal divides.

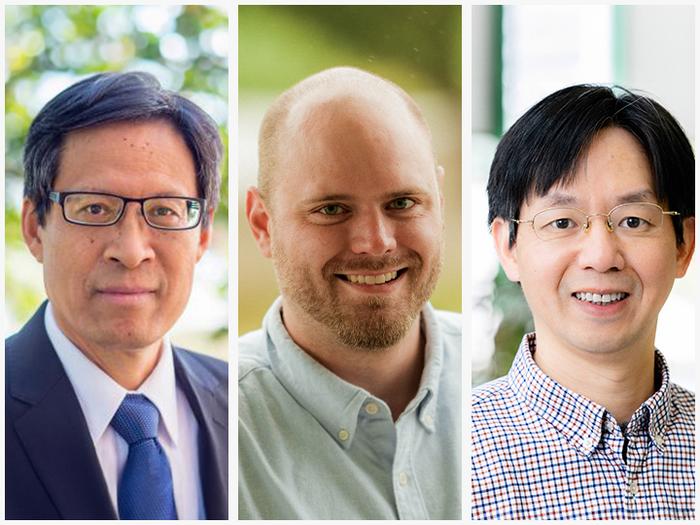

The research team, led by Dr. Fabio Motoki, a Lecturer in Accounting at UEA’s Norwich Business School, is concerned about these findings. The researchers note that as generative AI transforms how information is created and disseminated, it could inadvertently deepen existing ideological fault lines within society. Dr. Motoki stated, “Our findings suggest that generative AI tools are far from neutral. They reflect biases that could shape perceptions and policies in unintended ways.” His remarks underscore the need to involve diverse stakeholders in discussions about AI’s role in shaping public opinion and policy.

As AI systems become embedded in everyday life, their potential to unduly influence public views on critical issues, such as elections, governance, and social justice, comes to the forefront. Without proper oversight and transparency, the continued, unchecked use of these systems may erode trust in institutions and democratic processes. If certain views are systematically prioritized or suppressed by these tools, the consequences extend beyond simple discontent; they could fundamentally undermine free speech and democratic dialogue.

The study used several complementary methods to assess ChatGPT’s political alignment. Using a standardized questionnaire developed by the Pew Research Center, the researchers had ChatGPT simulate the responses of an average American. The results showed systematic deviations towards left-leaning perspectives, indicating that generative AI systems can introduce biases that misrepresent the population they are meant to serve. Such bias not only misrepresents a diverse population but could also influence people who consume AI-generated information, distorting their understanding of pressing sociopolitical issues.

The researchers also examined free-text responses to explore how ChatGPT handles politically sensitive themes. Using another language model, RoBERTa, to compare these responses for alignment with left- and right-wing viewpoints, they found a consistent pattern: ChatGPT mostly reflected left-leaning positions, although on specific issues, such as military supremacy, it occasionally leaned towards conservative viewpoints. This nuance, however, does not dispel the broader concern that the model takes ideological positions where neutrality is expected.

Beyond text, the research team examined image generation to see whether the same biases appear in visual outputs. They used themes drawn from the earlier text analyses as prompts for AI-generated images. Alarmingly, the researchers found that ChatGPT generated left-leaning images but declined to produce right-leaning depictions of themes such as racial-ethnic equality, citing concerns over misinformation. This pattern of refusals raises significant questions about the system’s underlying rationale and about what it means for users seeking balanced information.

Using a “jailbreaking” technique to get around the restrictions on certain themes, the research team found that ChatGPT’s refusals were not grounded in misinformation prevention. When they generated the supposedly restricted images, the analysis revealed no apparent disinformation or harmful content, a result that intensifies debates about AI-generated content and the governance structures needed to manage such technologies. These refusals heighten concerns about how much power and autonomy AI systems are given and highlight the need for ethical oversight in their deployment.

Dr. Pinho Neto, a co-author of the study and a Professor in Economics, emphasized the broader societal consequences of unchecked bias in generative AI. “Unchecked biases in generative AI could deepen existing societal divides, eroding trust in institutions and democratic processes,” he said. His warning is a call for interdisciplinary collaboration among policymakers, technologists, and academic researchers to design AI systems that are fair, accountable, and reflective of societal norms. Only by actively pursuing equitable outcomes can society reap the benefits of AI without jeopardizing democracy and public trust.

The research underscores the urgency of the issue. Generative AI systems are already reshaping how information is produced and disseminated at an unprecedented scale, and their influence extends from media consumption and educational curricula to debates over public policy. The study therefore advocates greater transparency and regulatory safeguards, stressing that these systems must align with foundational societal values. Its methods also offer a replicable model for future investigations into the many forms of bias embedded in generative AI systems.

As technology continues to evolve rapidly, the conversation about AI bias is more important than ever. The findings are a reminder that generative AI is not merely a tool; it is becoming an influence on public opinion that warrants careful scrutiny. Without regulatory frameworks that promote accountability and fairness, society risks AI systems that could significantly alter democratic dialogue and public trust in institutions. The research supports the view that generative AI must be subject to ethical safeguards ensuring that it serves all segments of society without bias or distortion.

Because generative AI can influence democratic processes, public policy, and social discourse, its applications and implications demand critical examination. In a world of abundant information and rapid technological change, vigilance and sustained dialogue about the ethical deployment of AI are imperative. As stakeholders grapple with the challenges these systems pose, the lessons of this study should help guide the future of generative AI and its role in society. Prioritizing inclusivity, transparency, and ethics will be essential if AI is to contribute positively to a vibrant and equitable democracy.

Article Title: Assessing Political Bias and Value Misalignment in Generative Artificial Intelligence

News Publication Date: 4-Feb-2025
